
ETRI Proposes AI Safety Standards to ISO for Global Adoption

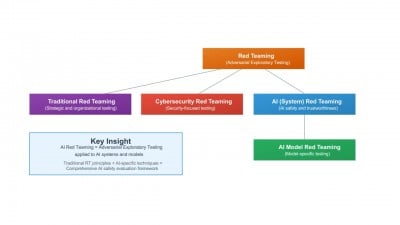

The Electronics and Telecommunications Research Institute (ETRI) has proposed two standards to the International Organization for Standardization and the International Electrotechnical Commission (ISO/IEC), aimed at improving the safety and trustworthiness of artificial intelligence (AI) systems. The proposals, “AI Red Team Testing” and the “Trustworthiness Fact Label (TFL),” respond to growing concerns about the reliability of AI.

The AI Red Team Testing standard is intended to identify risks in AI systems before they are deployed. By simulating attack scenarios against a system, organizations can uncover vulnerabilities and mitigate them in advance, making AI technologies more resilient.

Alongside it, the Trustworthiness Fact Label (TFL) standard is designed to give consumers clear, accessible information about how trustworthy an AI system is. The TFL would provide a straightforward label indicating the level of trustworthiness of a given AI application, helping users make informed decisions when they interact with AI technology.

ETRI has begun full-scale development of these standards, which could significantly influence AI governance worldwide. As AI spreads into sectors ranging from healthcare to finance, the need for consistent and reliable standards is becoming increasingly critical.

Collaborative Efforts in AI Standardization

By submitting these proposals to ISO/IEC, ETRI is taking a leading role in shaping global AI safety standards and in fostering international dialogue and cooperation on AI safety.

ETRI’s work on these standards responds to global concerns about the rapid evolution of AI and its implications for society. With many countries and industries investing in AI, robust safety measures are essential to maintain public trust and minimize risks.

Global Implications and Future Outlook

As ETRI moves forward with the development of these standards, the implications could extend beyond South Korea to influence AI safety protocols worldwide. The establishment of unified standards could pave the way for regulatory frameworks that prioritize consumer safety and ethical AI use.

Beyond addressing existing concerns, this approach could set a precedent for future AI innovation. As the debate over AI governance continues, contributions from organizations such as ETRI will be crucial in shaping a safe and trustworthy AI environment for users around the world.
