
Local LLM and NotebookLM Combine for a Major Productivity Boost

Pairing NotebookLM, Google's AI-powered research tool, with a local large language model (LLM) has significantly improved productivity for some users. The hybrid approach gives them better context and more control, easing common frustrations of long research projects.

Enhancing Research Workflows

For many professionals, large projects mean juggling a complex digital research workflow. NotebookLM excels at organizing research and generating insights grounded in the user's sources, but as a cloud service it cannot match the speed and privacy of a capable LLM running on local hardware. That gap prompted users to experiment with a hybrid setup that combines the strengths of both.

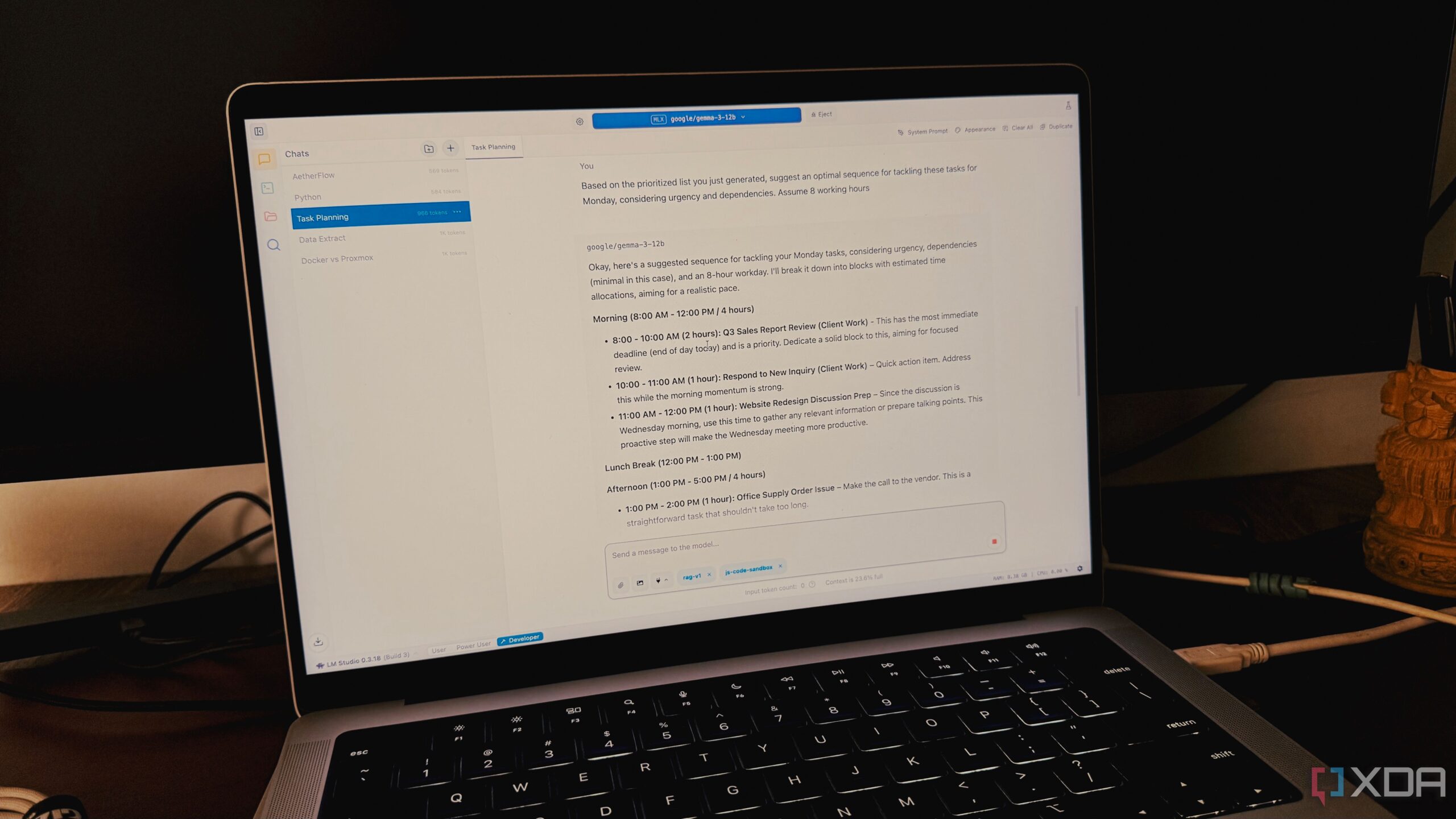

The workflow begins with a local LLM, for example the 20B-parameter variant of OpenAI's open-weight gpt-oss model running in LM Studio. When tackling a broad subject such as self-hosting applications with Docker, the user can quickly generate a structured overview that serves as a foundation for deeper research.
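As a rough illustration of this first step, the sketch below asks a local model for a structured primer through LM Studio's OpenAI-compatible local server. It assumes the server is running on LM Studio's default port (1234) and that the model is loaded under the identifier `openai/gpt-oss-20b`; both are assumptions to check against your own setup, and the prompt wording is hypothetical. Only the Python standard library is used.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running with its OpenAI-compatible
# API on the default port; change this if you configured a different one.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"
# Assumption: the identifier LM Studio shows for the loaded model.
MODEL_ID = "openai/gpt-oss-20b"


def build_primer_request(topic: str, model: str = MODEL_ID) -> dict:
    """Build an OpenAI-style chat completion payload asking for a structured primer."""
    system = (
        "You write concise, structured research primers in Markdown. "
        "Use a heading for each key area and short bullet points under it."
    )
    user = (
        f"Write a structured overview of {topic}. "
        "Cover key areas such as security practices and networking fundamentals."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        "temperature": 0.3,  # lower temperature for a more focused outline
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def generate_primer(topic: str, url: str = LMSTUDIO_URL) -> str:
    """POST the request to the local server and return the primer text."""
    payload = json.dumps(build_primer_request(topic)).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local generation can be slow on modest hardware, so allow a long timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return extract_reply(json.load(resp))


# Usage (requires the LM Studio server to be running):
# print(generate_primer("self-hosting applications with Docker"))
```

Keeping the payload builder and response parser separate from the network call makes the prompt easy to adjust and test without a running server.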

Once the local LLM produces this initial primer, covering key areas such as security practices and networking fundamentals, it is added to NotebookLM as a source. There it sits alongside the sources the user has already gathered, such as PDF files, YouTube transcripts, and detailed blog posts, so NotebookLM can draw on all of them together.
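NotebookLM is typically used through its own interface, so the hand-off is usually manual: save the primer, then upload or paste it as a new source. The hypothetical helpers below write the primer to a dated Markdown file ready for upload. They assume your NotebookLM workspace accepts Markdown uploads; a `.txt` extension or pasting the text directly is a fallback.

```python
import re
from datetime import date
from pathlib import Path
from typing import Optional


def slugify(title: str) -> str:
    """Turn a title into a safe, lowercase file-name stem (hypothetical helper)."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug or "primer"


def save_primer(title: str, primer: str, out_dir: Path,
                today: Optional[date] = None) -> Path:
    """Write the primer to a dated Markdown file and return its path.

    Assumption: NotebookLM accepts .md uploads; rename to .txt if it does not.
    """
    today = today or date.today()
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / f"{slugify(title)}-{today.isoformat()}.md"
    # A title and date header make the source easy to identify in the notebook.
    header = f"# {title}\n\n_Generated by a local LLM on {today.isoformat()}._\n\n"
    path.write_text(header + primer.strip() + "\n", encoding="utf-8")
    return path
```

Dating the file name makes it easy to tell successive drafts of the primer apart once several are loaded into the same notebook.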

Maximizing Efficiency Through Integration

With the structured overview loaded into NotebookLM, the workflow changes noticeably. Users can ask focused questions, such as "What essential components and tools are necessary for successfully self-hosting applications using Docker?", and get quick, relevant answers drawn from their sources.

Audio Overview generation is another significant feature. It turns the notebook's sources into a podcast-style spoken summary, so users can listen to their research while multitasking. NotebookLM's inline citations also provide instant validation: each answer points back to the passage in the source where a fact originated, which saves substantial time on manual cross-referencing.

For the users who have adopted it, combining a local LLM with NotebookLM has been transformative. They report moving past the limits of cloud-only or local-only workflows, working faster while keeping more control over their data.

This pairing marks a meaningful step forward in research methods. For professionals looking for better ways to manage complex projects, combining a local LLM with NotebookLM is a promising strategy: the local model handles fast, private drafting, while NotebookLM grounds deeper questions in the user's own sources. The approach supports deep insight while helping users keep the freedom and privacy their research requires.

Users who want to push their productivity further can explore the additional features of both tools.
